Data science has become an integral part of decision-making in businesses and organizations across various industries. With its ability to extract meaningful insights from large datasets, data science has transformed the way businesses operate, making it a highly sought-after field for professionals around the world. And at the heart of data science lies programming, most often in Python, a versatile and powerful language.

Python’s popularity in data science is no coincidence. Its strengths in terms of readability, flexibility, and an extensive library ecosystem have made it the go-to language for data scientists worldwide. In this comprehensive guide, we will explore the world of programming for data science using Python, covering everything from the basics to advanced techniques, along with practical examples and case studies.

Whether you’re just starting your journey in data science or looking to expand your skills, this article will equip you with the necessary knowledge and tools to become proficient in programming for data analysis with Python.

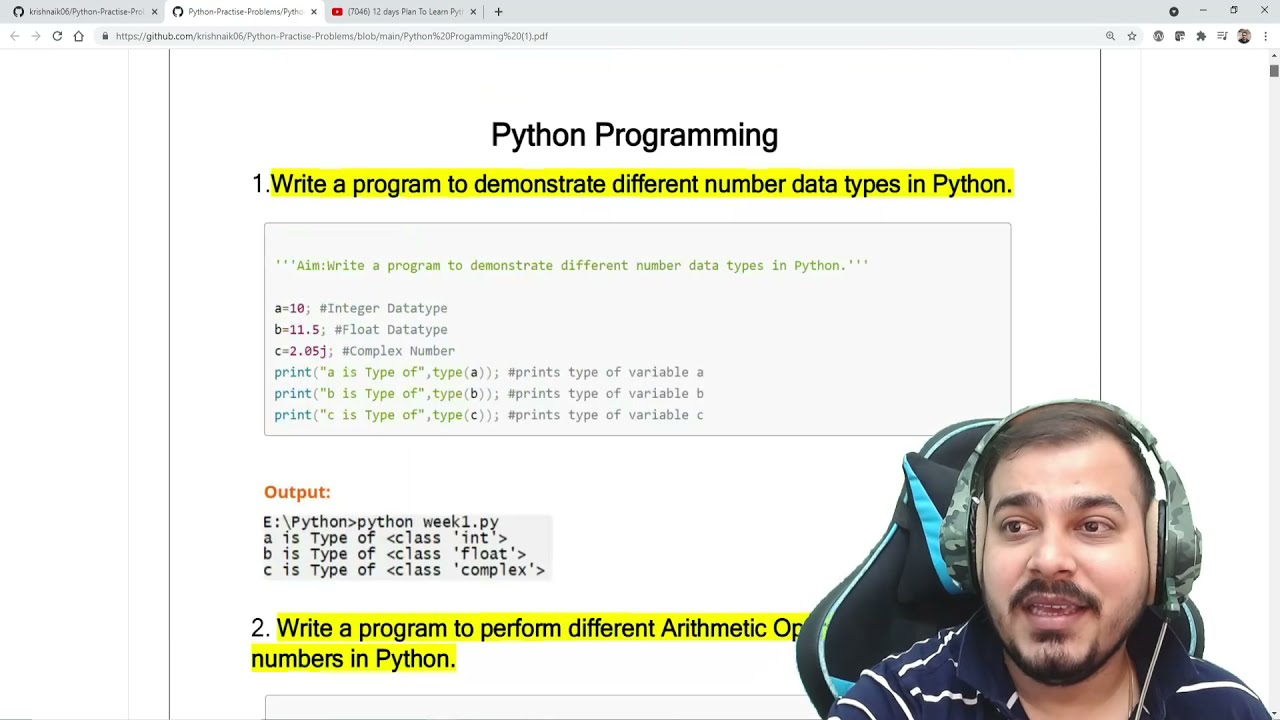

Introduction to Python Programming

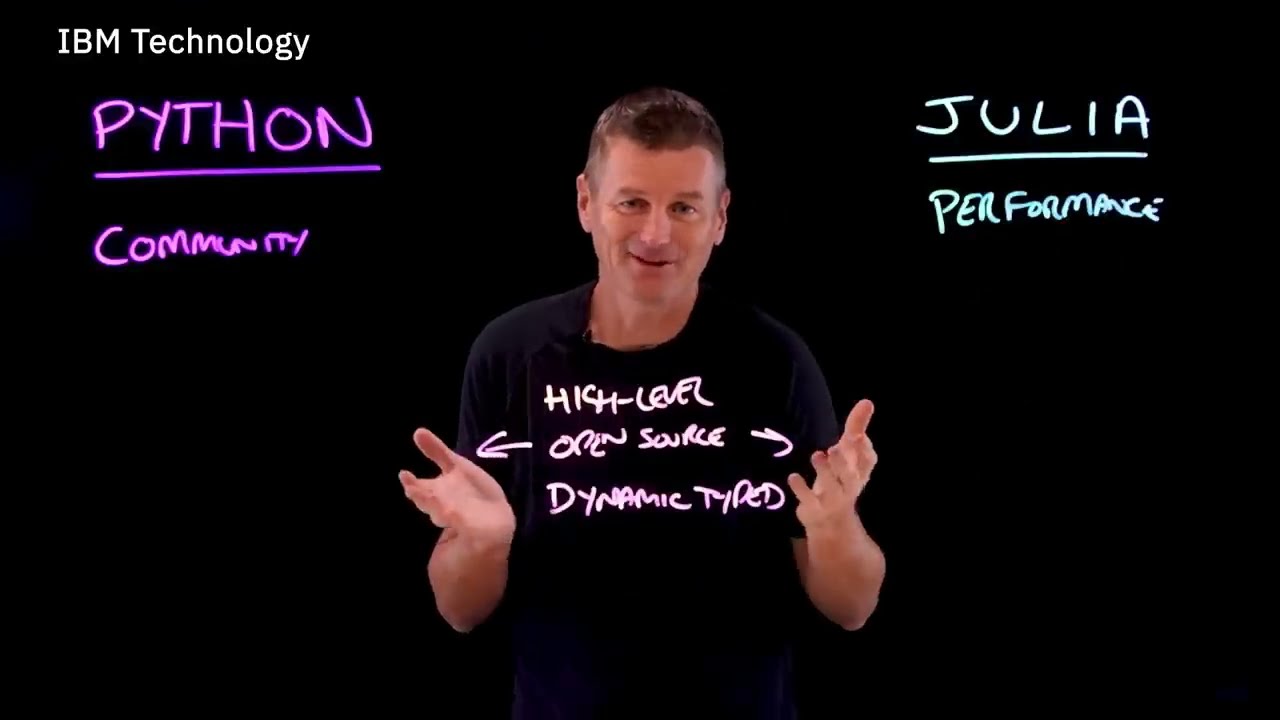

Before diving into the world of data science, it’s essential to have a basic understanding of programming with Python. Python is an open-source, high-level, interpreted programming language that was first released in 1991. It was created by Guido van Rossum, a Dutch programmer, to emphasize code readability and simplicity.

One of the major advantages of Python over other languages is its relatively simple syntax, making it easy for beginners to learn and use. This also allows for fast development, making it ideal for data science projects where quick prototyping and experimentation are crucial.

Additionally, Python’s vast library ecosystem plays a significant role in its popularity among data scientists. These libraries, such as NumPy, Pandas, and Matplotlib, provide a wide range of functions and tools specifically designed for data manipulation, analysis, and visualization, making it easier to handle large datasets and perform complex computations.

Basics of Data Analysis

Data analysis is the process of inspecting, cleansing, transforming, and modeling data to uncover useful information and make informed decisions. Python’s extensive library ecosystem offers a variety of tools and techniques for data analysis, making it an ideal language for this task. Let’s dive into some of the essential libraries and techniques for data analysis with Python.

NumPy: The Foundation of Data Analysis in Python

NumPy (Numerical Python) is a fundamental library for scientific computing in Python. It provides a powerful N-dimensional array object, along with various functions for working with these arrays. For numerical work, NumPy arrays are typically much faster and more memory-efficient than Python lists, because they store elements of a single type in a contiguous block of memory. This makes them well suited to handling large datasets.

One of the key features of NumPy is its ability to perform vectorized operations on arrays, which apply an operation to every element at once and are usually much faster than equivalent Python loops. It also offers a wide range of functions for statistics, linear algebra, and random number generation, making it a versatile tool for data analysis.
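To make the contrast between loop-based and vectorized code concrete, here is a minimal sketch. The array contents and variable names are made up for illustration; the calls themselves (`np.arange`, `np.linalg.inv`, `np.random.default_rng`) are standard NumPy.

```python
import numpy as np

values = np.arange(1, 6, dtype=float)  # illustrative data: 1.0 through 5.0

# loop-based: square each element one at a time in Python
squares_loop = np.array([v ** 2 for v in values])

# vectorized: a single expression applied to the whole array
squares_vec = values ** 2

# statistics
mean_value = values.mean()
std_value = values.std()  # population standard deviation (ddof=0)

# linear algebra: invert a small diagonal matrix
matrix = np.array([[2.0, 0.0], [0.0, 4.0]])
inverse = np.linalg.inv(matrix)

# random number generation with a seeded generator for reproducibility
rng = np.random.default_rng(seed=42)
sample = rng.normal(loc=0.0, scale=1.0, size=3)

print(squares_vec)
print(mean_value, std_value)
print(inverse)
```

Both approaches produce the same squares; the vectorized form is shorter and pushes the loop into NumPy's compiled code, which is where the speed-up on large arrays comes from.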

Below is an example of creating a NumPy array and performing basic operations on it:

import numpy as np

# create a 1D array

arr = np.array([1, 2, 3, 4, 5])

print(arr)

# output: [1 2 3 4 5]

# perform basic arithmetic operations on the array

print(arr + 2)

# output: [3 4 5 6 7]

print(arr * 2)

# output: [ 2  4  6  8 10]

Pandas: Data Analysis Made Easy

Pandas is another essential library for data analysis in Python. It provides high-performance data structures and tools for manipulating and analyzing tabular data, making it a go-to choice for working with datasets in data science projects.

The primary data structure in Pandas is the DataFrame, which is a two-dimensional, labeled data structure with columns of potentially different types. It also offers various functions for data cleaning, merging, grouping, and filtering, making it easier to prepare data for analysis.
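The operations mentioned above (filtering, grouping, and merging) can be sketched on a small hand-made DataFrame. The data and the `categories` lookup table are hypothetical and exist only for this illustration.

```python
import pandas as pd

# hypothetical sales records, built inline so the example is self-contained
sales = pd.DataFrame({
    "Item": ["Apple", "Orange", "Banana", "Apple", "Banana"],
    "Price": [2.50, 3.00, 1.50, 2.50, 1.50],
    "Quantity": [4, 5, 2, 3, 1],
})

# hypothetical lookup table used to demonstrate merging
categories = pd.DataFrame({
    "Item": ["Apple", "Orange", "Banana"],
    "Category": ["Pome", "Citrus", "Tropical"],
})

# filtering: keep rows where at least three units were sold
large_orders = sales[sales["Quantity"] >= 3]

# grouping: total quantity sold per item
quantity_per_item = sales.groupby("Item")["Quantity"].sum()

# merging: attach the category to every sales row
merged = sales.merge(categories, on="Item", how="left")

print(large_orders)
print(quantity_per_item)
print(merged)
```

A left merge is used here so that every sales row is kept even if an item were missing from the lookup table.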

Let’s take a look at an example of creating a DataFrame from a CSV file and performing some basic operations:

import pandas as pd

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# display the first five rows of the DataFrame

print(df.head())

| | Date | Item | Price | Quantity |
|---|---|---|---|---|
| 0 | 2021-02-01 | Apple | $2.50 | 4 |
| 1 | 2021-02-01 | Orange | $3.00 | 5 |
| 2 | 2021-02-02 | Banana | $1.50 | 2 |
| 3 | 2021-02-02 | Apple | $2.50 | 3 |
| 4 | 2021-02-03 | Banana | $1.50 | 1 |

Data Cleaning and Preprocessing

Before diving into advanced data analysis techniques, it’s essential to ensure that the data is clean and ready for analysis. Python’s Pandas library provides several functions for data cleaning and preprocessing, such as handling missing values, removing duplicates, and dealing with inconsistent data.
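As a rough sketch of two of these tasks, removing duplicates and fixing inconsistent values, consider the following. The `raw` table is invented for illustration, and it assumes prices arrive as text with a leading dollar sign, which may not match your file.

```python
import pandas as pd

# hypothetical raw data with inconsistent item names, text prices, and a duplicate row
raw = pd.DataFrame({
    "Item": ["Apple", " apple", "Banana", "Banana"],
    "Price": ["$2.50", "$2.50", "$1.50", "$1.50"],
    "Quantity": [4, 3, 2, 2],
})

clean = raw.copy()

# normalize inconsistent spelling: trim whitespace and capitalize consistently
clean["Item"] = clean["Item"].str.strip().str.title()

# convert text such as "$2.50" into the number 2.5
clean["Price"] = clean["Price"].str.replace("$", "", regex=False).astype(float)

# remove rows that are exact duplicates after normalization
clean = clean.drop_duplicates().reset_index(drop=True)

print(clean)
```

Normalizing values before calling `drop_duplicates` matters: rows that differ only in stray whitespace or capitalization would otherwise not be recognized as duplicates.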

One of the most common tasks in data cleaning is dealing with missing values. Let’s see how we can handle this using Pandas:

import pandas as pd

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# check for any missing values

print(df.isnull().sum())

| | Date | Item | Price | Quantity |
|---|---|---|---|---|
| 0 | 0 | 0 | 1 | 0 |

As we can see, there is one missing value in the “Price” column. We can either remove this row or fill it with a suitable value.

# option 1: drop rows with any missing values

df = df.dropna()

# option 2 (instead of option 1): replace missing prices with the mean of the column

df['Price'] = df['Price'].fillna(df['Price'].mean())

Exploratory Data Analysis (EDA)

EDA is a crucial step in data analysis that involves analyzing and visualizing data to extract insights, identify patterns, and test assumptions. Python’s Pandas library, along with other visualization libraries like Matplotlib and Seaborn, provides powerful tools for EDA.

Let’s take a look at an example of using Pandas and Matplotlib to visualize the relationship between price and quantity for different items in our sales dataset:

import pandas as pd

import matplotlib.pyplot as plt

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# create a scatter plot

plt.scatter(df['Quantity'], df['Price'])

plt.xlabel('Quantity')

plt.ylabel('Price')

plt.show()

From the scatter plot, we can see a positive correlation between quantity and price: in this dataset, higher-priced items tend to be sold in larger quantities. This is an association in the data, not evidence that one causes the other.
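The visual impression from a scatter plot can be backed up with a number. The sketch below computes the Pearson correlation coefficient using only the five rows shown earlier by `df.head()`, with prices entered as plain numbers; the full file may give a different value.

```python
import pandas as pd

# the five sample rows from the sales table, prices as floats
sample = pd.DataFrame({
    "Price": [2.50, 3.00, 1.50, 2.50, 1.50],
    "Quantity": [4, 5, 2, 3, 1],
})

# Pearson correlation between the two columns (pandas' default method)
r = sample["Quantity"].corr(sample["Price"])

print(round(r, 2))
```

For these five rows the coefficient comes out at roughly 0.94, a strong positive association, though a sample this small says little about the dataset as a whole.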

Advanced Data Analysis Techniques

After cleaning and preprocessing the data, we can move on to more advanced techniques for data analysis. These techniques involve using statistical and machine learning methods to uncover insights and make predictions from the data.

Statistics for Data Analysis

Statistics is a branch of mathematics that deals with collecting, analyzing, and interpreting data. In data science, statistics plays a crucial role in understanding relationships between variables, testing hypotheses, and making predictions.

Python’s SciPy library offers various functions for statistical analysis, such as measures of central tendency, correlation, and hypothesis tests. Let’s take a look at an example of calculating the mean, median, and mode for the “Price” column in our sales dataset:

import pandas as pd

from scipy import stats

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# calculate mean, median, and mode for Price column

mean = df['Price'].mean()

median = df['Price'].median()

mode = stats.mode(df['Price'])

print('Mean: ' + str(mean))

print('Median: ' + str(median))

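print('Mode: ' + str(mode))

Output:

Mean: 2.25

Median: 2.0

Mode: ModeResult(mode=array([1.5]), count=array([3]))

From these results, we can see that the mean and median are relatively close, which suggests the prices are not strongly skewed. On its own, this does not show that the data is normally distributed. The mode, which is the most frequently occurring value, is $1.50, with a frequency of three. (The exact form of the ModeResult output depends on the SciPy version; recent versions print plain numbers rather than arrays.)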
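Comparing the mean and median is only a rough check of symmetry. A more direct look at the shape of a distribution can be sketched with SciPy's `skew` and `shapiro` functions. The two samples below are synthetic and generated only for illustration; they are not the sales data.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(seed=0)

# synthetic samples: one bell-shaped, one with a long right tail
samples = {
    "symmetric": rng.normal(loc=2.0, scale=0.5, size=500),
    "skewed": rng.exponential(scale=2.0, size=500),
}

results = {}
for name, data in samples.items():
    skewness = stats.skew(data)               # near 0 for symmetric data
    statistic, p_value = stats.shapiro(data)  # Shapiro-Wilk test of normality
    results[name] = {"skew": skewness, "p_value": p_value}
    print(f"{name}: skew={skewness:.2f}, Shapiro-Wilk p={p_value:.4f}")
```

A small p-value from the Shapiro-Wilk test is evidence against normality; a large one only means the test found no evidence against it, which is weaker than confirming a normal distribution.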

Machine Learning with Python

Machine learning is a subset of artificial intelligence that involves training algorithms to learn from data without being explicitly programmed. It allows computers to make predictions or decisions based on patterns and relationships found in the data.

Python has become the go-to language for machine learning, thanks to its powerful libraries and tools, such as scikit-learn, TensorFlow, and PyTorch. These libraries offer a wide range of algorithms and models for tasks such as classification, regression, clustering, and more.

Let’s take a look at an example of using scikit-learn to train a decision tree classifier to predict whether a customer will make a purchase based on their age and income:

import pandas as pd

from sklearn import tree

# read csv file into a DataFrame

df = pd.read_csv('customer_data.csv')

# split data into features and target

X = df[['Age', 'Income']]

y = df['Purchase']

# train a decision tree classifier

clf = tree.DecisionTreeClassifier()

clf.fit(X, y)

# make predictions on new data

predictions = clf.predict(pd.DataFrame([[35, 50000]], columns=['Age', 'Income']))

print(predictions)

Output:

[1]

Based on the input of age 35 and income $50,000, the model predicts that the customer will make a purchase (represented by 1).
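A single prediction says little about how reliable a model is. A common next step, sketched here, is to hold out part of the data and measure accuracy on it. The customer data below is synthetic, and the rule that generates the `Purchase` label is an assumption made purely so the example runs without a CSV file.

```python
import numpy as np
import pandas as pd
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# synthetic customers (hypothetical; stands in for customer_data.csv)
rng = np.random.default_rng(seed=0)
n = 200
customers = pd.DataFrame({
    "Age": rng.integers(18, 70, size=n),
    "Income": rng.integers(20_000, 120_000, size=n),
})
# made-up labeling rule: customers above a fixed income buy
customers["Purchase"] = (customers["Income"] > 60_000).astype(int)

X = customers[["Age", "Income"]]
y = customers["Purchase"]

# keep 25% of the rows aside for evaluation
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0
)

# a shallow tree is less prone to memorizing the training data
clf = DecisionTreeClassifier(max_depth=3, random_state=0)
clf.fit(X_train, y_train)

accuracy = accuracy_score(y_test, clf.predict(X_test))
print(f"Held-out accuracy: {accuracy:.2f}")
```

Accuracy will be high here because the labels follow a simple rule; on real customer data, expect a noisier picture and consider additional metrics such as precision and recall.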

Data Visualization with Python

Data visualization is the process of creating visual representations of data to communicate insights and findings effectively. Python offers several libraries for data visualization, such as Matplotlib, Seaborn, and Plotly, each with its unique capabilities and use cases.

Matplotlib: Creating Basic Visualizations

Matplotlib is a popular library for creating 2D plots and charts in Python. It provides a wide range of customizable options for creating different types of visualizations, such as line plots, bar charts, scatter plots, histograms, and more.

Let’s take a look at an example of creating a histogram using Matplotlib to visualize the distribution of values in our sales dataset:

import pandas as pd

import matplotlib.pyplot as plt

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# create a histogram

plt.hist(df['Price'])

plt.xlabel('Price')

plt.ylabel('Frequency')

plt.show()

From this histogram, we can see that the most common price point for items in our dataset is $1.50, with a few outliers at higher prices.
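The counts behind a histogram can also be read off numerically. Matplotlib's `hist` bins data with `numpy.histogram`, so the sketch below calls that function directly on the five sample prices shown earlier. The choice of three bins is arbitrary and made only to keep the output short.

```python
import numpy as np

# the five sample prices from the sales table
prices = np.array([2.50, 3.00, 1.50, 2.50, 1.50])

# counts per bin and the bin boundaries
counts, bin_edges = np.histogram(prices, bins=3)

for count, left, right in zip(counts, bin_edges[:-1], bin_edges[1:]):
    print(f"{left:.2f} to {right:.2f}: {count}")
```

Changing `bins` changes how values are grouped, which is worth remembering when reading any histogram: the apparent shape depends on the bin width.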

Interactive Visualizations with Plotly

Plotly is another popular library for creating interactive visualizations in Python. It offers various types of charts, including bar charts, scatter plots, and heatmaps, along with customizable options and interactivity features such as zooming and hovering.

Let’s see an example of creating a stacked bar chart using Plotly to visualize the sales data for different items over time:

import pandas as pd

import plotly.express as px

# read csv file into a DataFrame

df = pd.read_csv('sales_data.csv')

# create a stacked bar chart

fig = px.bar(df, x='Date', y='Quantity', color='Item')

fig.update_layout(barmode='stack')

fig.show()

From this chart, we can see that the sales of apples and oranges have been consistently higher compared to bananas throughout the week.
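The heights of the stacked segments correspond to quantities summed per date and item, which can be cross-checked with a pivot table. This sketch uses only the five sample rows shown earlier, so it covers three days rather than the full period in the file.

```python
import pandas as pd

# the five sample rows from the sales table
sample = pd.DataFrame({
    "Date": ["2021-02-01", "2021-02-01", "2021-02-02", "2021-02-02", "2021-02-03"],
    "Item": ["Apple", "Orange", "Banana", "Apple", "Banana"],
    "Quantity": [4, 5, 2, 3, 1],
})

# one row per date, one column per item, summed quantities in the cells
totals = sample.pivot_table(
    index="Date", columns="Item", values="Quantity",
    aggfunc="sum", fill_value=0,
)

print(totals)
```

Each row of `totals` corresponds to one bar in the chart, and each column to one colored segment.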

Case Studies and Practical Examples

In addition to understanding the concepts and techniques, it’s also important to see how they are applied in real-world scenarios. Let’s take a look at some case studies and practical examples of data science projects using Python.

Predicting Stock Prices with Machine Learning

One of the most common applications of data science is predicting financial trends and stock prices. In this case study, we will use Python and machine learning to predict the stock prices of a company based on historical data.

We will use the Yahoo Finance API to gather historical stock price data for Tesla (TSLA) from 2016 to 2021. Then, we will preprocess the data, train a linear regression model using scikit-learn, and make predictions for the next 30 days.

You can find the full code and explanation for this project in this GitHub repository.
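As a minimal sketch of the modeling step only, the code below fits a linear regression to a price series and extrapolates 30 days ahead. The prices are synthetic (a made-up upward trend plus noise), not real TSLA data, and no data is downloaded; using the day index as the single feature is also an assumption of this sketch.

```python
import numpy as np
from sklearn.linear_model import LinearRegression

# synthetic daily closing prices: linear trend plus random noise (hypothetical)
rng = np.random.default_rng(seed=1)
n_days = 250
days = np.arange(n_days).reshape(-1, 1)  # feature: day index, shape (n_days, 1)
prices = 100 + 0.2 * days.ravel() + rng.normal(0, 2.0, size=n_days)

# fit a straight line through the historical prices
model = LinearRegression()
model.fit(days, prices)

# extrapolate the fitted line over the next 30 days
future_days = np.arange(n_days, n_days + 30).reshape(-1, 1)
forecast = model.predict(future_days)

print(f"Estimated daily trend: {model.coef_[0]:.3f}")
print(f"Forecast for day {n_days + 29}: {forecast[-1]:.2f}")
```

A straight-line extrapolation is a useful baseline for learning the workflow, but real stock prices rarely follow a stable linear trend, so forecasts from such a model should be treated with caution.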

Analyzing Air Quality Data with Python

In this practical example, we will use Python and its data analysis libraries to analyze air quality data from a monitoring station in New Delhi, India. We will focus on understanding the trends and patterns in the data over time and identify any correlations between different pollutants.

This project involves using Pandas for data manipulation, Matplotlib for visualizations, and NumPy for statistical calculations. You can find the full code and explanation for this project in this GitHub repository.
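The two analysis steps described above, trends over time and correlations between pollutants, can be sketched as follows. The readings are synthetic and the column names (`PM2.5`, `PM10`, `NO2`) are assumptions; a real monitoring-station file will differ.

```python
import numpy as np
import pandas as pd

# synthetic daily readings for 90 days (hypothetical values)
rng = np.random.default_rng(seed=2)
dates = pd.date_range("2021-01-01", periods=90, freq="D")
pm25 = 80 + rng.normal(0, 10, size=90)

air = pd.DataFrame({
    "PM2.5": pm25,
    "PM10": 1.5 * pm25 + rng.normal(0, 5, size=90),  # constructed to track PM2.5
    "NO2": 40 + rng.normal(0, 8, size=90),           # generated independently
}, index=dates)

# trend: 7-day rolling mean smooths out day-to-day noise
weekly_trend = air.rolling(window=7).mean()

# relationships: pairwise Pearson correlations between pollutants
correlations = air.corr()

print(weekly_trend.tail(3))
print(correlations.round(2))
```

The first six rows of the rolling mean are empty because a full seven-day window is not yet available; the correlation matrix shows PM2.5 and PM10 moving together only because the synthetic data was built that way.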

Conclusion and Next Steps

In conclusion, Python has become an essential language for data science due to its versatile libraries, intuitive syntax, and ease of learning. In this comprehensive guide, we explored the basics of programming for data science using Python, including key libraries and techniques for data analysis, visualization, and machine learning.

With this foundation, you can further your knowledge and skills by exploring more advanced concepts and applications of data science with Python. Some next steps could include learning about deep learning, natural language processing, or big data analytics using libraries such as TensorFlow, NLTK, and Spark.

The world of data science is constantly evolving, and mastering Python will open doors to exciting career opportunities in this field. So keep learning, experimenting, and applying your skills to stay ahead in this transformative field.